It's official: ETC 2020 is over, and there's no more stretching it for me. It's been a wonderful conference, where I got to meet new people and some I've met before, as well as learn about new ideas, skills and tools. All in all, it has been a packed couple of days, in the best way possible.

The conference started with a talk by Mirjam Bäuerlein on TDD, and dogs. The talk was presented skillfully and was a good overview of what TDD is, covering the "why" on top of the "how". Personally, I didn't connect with the dog theme and didn't really see a new insight on the topic emerging from this unique perspective. As a keynote, I expected a bit more from this talk: I like keynotes to be inspiring or perspective changing, which wasn't the case for this one. Judged as a track talk, however, it was a very good talk on a subject that is quite important, even if it might be more familiar to regular conference attendees (and frankly, roughly half of the people at the conference were there for their first time).

After a short coffee break it was time for a regular track talk. I started with Jeremias Rößler's talk, "Test Automation without Assertions". The talk, as far as I could tell, was a presentation of a specific tool he has created, called Recheck-Web. As far as I could understand, it's a combination of a stability enhancer and an approval tool integrated into Selenium. It was a decent presentation, but I could see that there was no real value in it for me, as I'm already familiar with the concept of approval testing (and visual validation), and I don't really like talks focused on code, since they are difficult to pull off. Also, I have a request for all future speakers discussing coding-related issues: there are a lot of bad examples in the coding space, especially around test code. Please don't contribute to this pool without clearly marking "here's some bad code". If you want to show why your solution is a good thing, compare it to the best code possible without it.

So I left and went to check out another talk. As I was planning to attend the Pact workshop, I thought I might go and check out the talk about the same topic. Have I mentioned that coding talks are hard to pull off? Well, the part I entered was where a speaker stood with an open IDE and mumbled "here's how you can do this" several times. It might have been a great talk for those who were there from the start, but I stayed 5 minutes to see if the talk would get to more interesting things than code, and left this one as well.

The next event was the speed meet. Those who read my ETC posts in previous years know that it can get a bit too crowded for my liking, yet this year felt slightly less so - I was able to both talk with some people and not end up so exhausted that I needed to take some time off in a corner to recharge. I even managed to remember a name or two by the end of it. After the speed meet there was time for lunch, and I spoke a bit with Kaye and heard about their story of moving to the Netherlands.

Shortly after lunch it was time for the workshops. Due to a last minute change in the rooms I found myself in Fiona Charles' workshop titled "Boost Your Leadership Capability with Heuristics". It wasn't what I planned to go to initially, but despite having about five minutes before the actual start of the workshops, I decided to stay and learn. The workshop was meant to break the vague concept of leadership down into more useful components, and to notice that most leadership qualities and practices are heuristics, which makes them fallible and means we should notice when it is suitable to use each of them and when it isn't. For instance, providing feedback is generally seen as a good thing, but it is not suitable for cases where one does not have the credibility to provide feedback, or when the other side does not have the attention span to process it. Sadly, the time constraints were a bit too harsh on this workshop - we managed to complete a single exercise, and we were missing a bit of direction and maybe a summary from Fiona that would help participants notice what it was we did and how to carry this forward. I believe my explanation above is where the workshop was aimed, but I might be missing it completely.

Next on the menu - Hilary Weaver-Robb's talk on static analysis. It was a well rounded introduction to the topic, and really left me confident about starting such a thing back at home (next task - find some time to do that). In addition, Hilary divided the outcomes we can expect to get from a static analysis tool into different types - probable bugs, security vulnerabilities, code smells and even some performance enhancements. Each of those categories has a slightly different urgency to it, but the general thing to do about each of them is "understand, prioritize, fix". In most cases, one can skip straight to "fix", since the tools point quite clearly to a well defined issue. In some cases they expose problems that are more complex to fix, which one might not want to do right away. We also mentioned the difference between linters that show issues as one is coding, and analyzers running on the entire code base. All in all, I liked this talk very much.
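To make the idea of "a tool pointing clearly at a well defined issue" a bit more concrete, here is a minimal sketch of a toy analyzer. It is only an illustration and is not taken from the talk: the two checks, the `find_issues` helper and the `SAMPLE` source are all hypothetical, and the category labels are my own mapping onto the types mentioned above. It uses Python's standard `ast` module to scan source code without running it.

```python
import ast

# Hypothetical sample source to analyze; both issues in it are deliberate.
SAMPLE = '''
def add_item(item, bucket=[]):
    bucket.append(item)
    return bucket

def load(path):
    try:
        return open(path).read()
    except:
        return None
'''


def find_issues(source):
    """Return sorted (line, category, message) tuples for two known patterns."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        # A mutable default argument is shared between calls: a probable bug.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for default in node.args.defaults + node.args.kw_defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    issues.append((default.lineno, "probable bug",
                                   f"mutable default argument in {node.name}()"))
        # A bare "except:" hides every kind of error: a code smell.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append((node.lineno, "code smell", "bare except clause"))
    return sorted(issues)


if __name__ == "__main__":
    for line, category, message in find_issues(SAMPLE):
        print(f"line {line}: [{category}] {message}")
```

Real tools do far more than this, but the shape is the same: each finding comes with a location and a category, which is what makes the "understand, prioritize, fix" loop workable.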

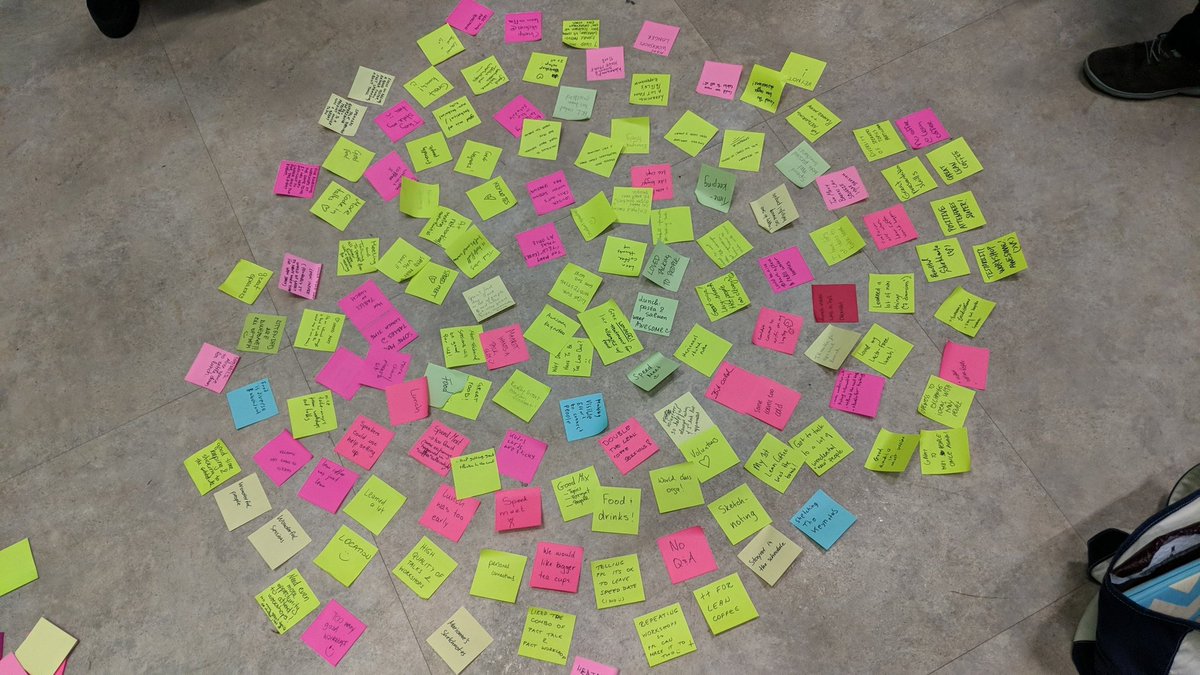

Finally, it was time for lean coffee and the closing keynote. Lean coffee was, as usual, very interesting. It was a bit odd that I was the only one with more than one topic suggested, but fortunately the topics presented by others were so good that only one of my topics actually made it to the discussion. Co-facilitated by Mirjam & Gem, everything went smoothly enough, with the one usual caveat of not having enough time for this activity.

The closing keynote for the day was given by Maaike Brinkhof and was confusingly titled "Using DevOps to Grow". Why confusing? Because this talk was not very much about DevOps, which exists in the background, but was in fact about growing professionally and learning to expand beyond the narrow definitions of one's role. Sure, DevOps helps, as there are many collaboration opportunities, and as the role of a dedicated tester becomes even more narrow and other needs, such as good monitoring, simply present themselves, but one does not need to wait for DevOps to achieve such progress. In the end, it all comes down to working together as a team and focusing on value rather than on roles. This message, while it's not the first time I've heard it, is extremely important, and is new enough to actually change the way people might think. As keynotes go, it was definitely a good one, and it was important to hear it during the conference, to encourage people to step outside of their familiar comfort zone.

End of day one, more or less - there was still some socializing with drinks and refreshments happening for a couple more hours, and then I went out for dinner with a bunch of cool people as well.

I managed to get to my room by the early hour of 23:30, and even thought I might end up going to sleep early. We'll ignore my optimism for a moment and skip ahead directly to the next day, which started with the opening keynote, an overview of application security. More specifically, Patricia Aas presented her take on how DevOps culture changes the way application security is taken care of, with 6 ground rules to actually make it work: Live off the land (use the tools already in place, don't add your own tools and ask the developers to work with them), Have Dev build it (because you don't have time to do that yourself, and they will be more committed to it if it's theirs), Trunk based development (this one is just a tip taken out of "Accelerate"; I don't think it has anything to do with security, but the gist of it is to have small chunks of code to review, so that reviewing them for security is feasible), Use existing crisis process (and train for it, since people revert to their instincts when crisis hits), Automate as much as possible (and I would add - as much as sensible) and Treat your infrastructure as code (since, you know, it is a part of your code, and needs to go through the same system of checks and deployment process). All in all, it was interesting to get the perspective of a security person on a development process. One particular point I connected to was her origin story - starting as a developer at a company that was constantly hit by attacks, everyone in that team learned about application security, and from there she continued down the security path. Having worked on a regulated product for ~7 years, it felt very natural to me.

After the keynote it was time for the workshops again. Having the workshops run twice is a really nice thing, as one can gather impressions from the attendees of the previous day and ease the inevitable dilemma of "Out of these 5 amazing activities I really want to attend 3, and they're all at the same time". This way we can choose 2 of those sessions. I went to the workshop on Pact given by Bernardo Guerreiro. To be frank, it wasn't so much a workshop as a live demo of the tool. Despite this being a difficult feat, Bernardo managed to produce a good code intensive talk. Moreover, given the limited timeframe of the workshop, I believe it was the best possible choice. Bernardo walked us through an overview of the entire process of deploying Pact in your pipelines, so that we could get a good grip on what capabilities the tool has and what problems might occur as a result of mistakes made at the beginning, so that we can learn from them. One thing that would have made the workshop perfect for me would have been a sign of "what are we aiming to achieve" and perhaps a handout of the different phases in the journey to help people remember the different insights, as they all played out very naturally during the workshop. I stayed a bit after the workshop to chat with Bernardo and we ran a bit late to lunch, which for me is an indication that something is going right in this conference.

After lunch I attended Gem Hill's talk, "Value and Visibility; How to figure out both when you don't do any hands on testing". Short version: as you move to a more senior tester role, your job looks a lot different from what it was before, and you might feel as if you're not doing anything and wonder where your time is slipping away. With the relevant differences, that's a pretty good description of feelings I had during my last couple of years in my previous workplace, and then again once I joined my current place and found myself in a completely different setting - working outside of multiple teams instead of being embedded in one. Gem shared some strategies that worked for her - noting on paper what she has achieved instead of only what she planned is one that I remember. Another thing was just the realization that work looks different.

The final track talk I attended was Crystal Onyeari Mbanefo's talk: "Exploring different ways of Giving and Receiving feedback". The topic itself is quite important, but on a more personal level, I did not connect with the way it was presented. It felt a bit too dry and rule driven, with no real story to hold everything together, and the division between feedback to a peer, to a manager (or someone more senior) and to someone down the food chain wasn't very useful to me. Probably, what I missed the most was a sense of purpose. We ditched a goal oriented definition of feedback and used instead one with no obvious purpose (it can be seen in this slide). For me, feedback with no intended goal is less valuable - I give feedback because I want to change a specific situation, or because I want to help someone learn, or because I want to encourage them for something they did. If I have no goal in mind, I can't really know if my feedback was effective, and it is not very different from any sort of observation or rant.

From that talk I went on to the open space, where I skipped raising a discussion around "quality is a toxic term", which, quite frankly, is more suitable for a lightning talk. Instead I got to attend two awesome activities. The first one was a sketchnoting session by Marianne, who has sketchnoted the keynotes for this conference as well. Now I have a strategy for how to create my own sketchnote.

The second discussion I attended was a game of value. The idea of the game is to do some "work" (passing on some poker chips) and get "paid" by the customer. The goal of this game is to practice learning about the customer value while still producing something. Short version - we failed miserably. I still managed to learn quite a bit from it - the first thing is that without getting some feedback from the customer we waste effort (someone paying us, by the way, is one sort of feedback). Another thing I've learned is that we didn't know the parameters we could tweak, nor which variables have an impact on the value - is it time of delivery? predictability? new functionality? snazzy UI? Can the value of those parameters change over time? Maaret wrote about it in more detail right here.

The final slot in the open space was one I added to the board, with a call for help - we have a lot of changes at work that need doing, and I've never actively pursued a culture change in an organisation, let alone done that in a bottom up fashion. So I asked for help in order to see if anyone has some ideas about how to plan for such a thing, and how to track our progress. It was an interesting discussion, and I got some tips on things I might do, but with the short time we had, and my deficient facilitation skills, it wasn't wrapped up into something whole that I can take and say "now I know where I'm headed". I did gain some insights that I still need to process.

Open space was done, time for the closing keynote: "The one with the compiler always wins" by Ulrika Malmgren. I still think it could be a title for a talk at a Python conference (and for all you pythonistas out there, I know Python is technically compiled, just not in any way that is helpful for me), but in fact it was a reminder of how, at the end of all ends, the person doing the coding has a lot of power in their hands - they may deviate from pre-agreed design, add small features they like in between their tasks, and raise the price of tasks they dislike. This power means there are consequences to how we act (or, if you are not part of building your product, to how your devs work). Inspired by Marianne's mini-workshop, I made a small sketchnote. It will take a lot more practice hours before it can be compared to a proper one, and I'm unsure if the experience is something I enjoyed enough to repeat. Note taking, in any format, tends to distract me from listening (now, combine that with poor memory, and let the fun begin), but it was interesting to see what I could do with a small set of tricks and a piece of paper.

And that's it for the conference - lights out, people going home, and all of that. I stayed a bit to see if I could help tidy up after us, and after dropping several things off at Marianne's car, I went to join a lot of people for dinner (I'll skip names, but you can see some of us here). A really nice surprise was when a small delegation from DDDEU came to say hi from the other side of town, so I got to say hi to Lisi Hocke as well. After dinner was done, we moved the conversation to the hotel's lobby and then just talked about a variety of things (from concrete feedback on a talk, to différance and speech act), and at some moment there were only 4 of us left - Jokin, Markus, Maaret and myself. As usually happens in such conversations, I learned a lot. For instance, I learned that creating a safe space for attendees also involves caring for the content and presentation of the talks, and we had an interesting discussion around connecting people online (I'm still not a fan, but I can see now how it can work for some people) and about CV filtering (we all agreed that it can cause a lot of missing out on great people; we differ on the question of whether we are willing to pay the price of using a more accurate but more time-costly predictor). Many of my insights from the game in the open space came from this conversation, where I learned about the various factors we should have considered, and about the options we had hiding in plain sight. Suddenly, it was 2:30 AM, so sleep time it is.

I still managed to extend the conference feeling for one extra day by meeting people for breakfast, then walking around in Amsterdam a bit, both alone and with Mira. In the evening we had dinner and were joined by Julia and her family, but that's ETC 2020 for me. It was a great mix of learning, meeting people and fun.